- Diagram of recommended workflow from Mari.

- Bash script that takes one argument, the name of the new project, and creates that project with a pre-made directory structure. Rename the file to make_project.sh after you download it; the website does not allow the .sh extension for filenames.
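The actual script is the one linked above. Purely as an illustration of the idea, a script of this kind might look like the sketch below. The function name `make_project` and every directory name in it are assumptions made for this example, not the Stat Lab's actual layout.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a project-skeleton script.
# The directory names below are illustrative assumptions only.

make_project() {
  local name="${1:-}"

  # Require exactly one non-empty project name.
  if [ -z "$name" ]; then
    echo "usage: make_project.sh PROJECT_NAME" >&2
    return 1
  fi

  # Refuse to overwrite an existing file or directory.
  if [ -e "$name" ]; then
    echo "error: '$name' already exists" >&2
    return 1
  fi

  # Create the (assumed) pre-made directory structure.
  mkdir -p "$name"/data/raw "$name"/data/processed \
           "$name"/code "$name"/output "$name"/docs

  # Seed a README so the project is self-describing.
  printf '# %s\n' "$name" > "$name/README.md"
}

# Run only when a project name is supplied on the command line.
if [ "$#" -ge 1 ]; then
  make_project "$1"
fi
```

Saved as make_project.sh, a sketch like this would be run as `bash make_project.sh my_new_project` from the directory that should contain the new project.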

The following list is from Janice Derr's book Statistical Consulting: A Guide to Effective Communication (2000, Duxbury Press):

- What is your role?

- What are the roles of others on the project?

- How will communications be maintained?

- What are the "deliverables"?

- What are the deadlines?

- How will you be compensated for your participation?

- What are acceptable statistical practices?

- What are the ownership rights?

- What stipulations are there for security and confidentiality?

- When is a project finished?

Links to document and website references for programming, research, and statistics.

Data science and programming:

- Advanced R - Hadley Wickham (webbook)

- R for Data Science - Hadley Wickham and Garrett Grolemund (webbook)

- Targets package - a pipeline tool that can help reduce code runtime

- R packages and functions for making presentation-ready tables:

    - arsenal package: tableby() presents descriptive statistics by the levels of a categorical variable (Table 1).

    - table1 package: presents descriptive statistics by the levels of a categorical variable for HTML output (Table 1).

    - gtsummary package: "The {gtsummary} package summarizes data sets, regression models, and more, using sensible defaults with highly customizable capabilities." While this is more difficult to use than tableby(), it can do much more and is more customizable. Here's a helpful 52-minute video on gtsummary by its creator.

Research:

Statistics:

- ANOVA - Type I/II/III sums of squares (SS) explained here and here

- Bayesian Data Analysis with BRMS (Bayesian Regression Models Using Stan) (YouTube video): "Mitzi Morris shows how you can quickly build robust models for data analysis and prediction using BRMS. After a brief overview of the advantages and limitations of BRMS and a quick review of multi-level regression, we work through an R-markdown notebook together, to see how to fit, visualize, and test the goodness of the model and resulting estimates. A link to the slides is available in the video description."

- CONSORT statement for statistical reporting of RCTs

- STROBE statement and checklists for statistical reporting of observational studies

- EQUATOR Network (Enhancing the QUAlity and Transparency Of health Research): has CONSORT (for RCTs) and STROBE (for observational studies) guidelines as well as PRISMA (for Systematic Reviews) and many others for different types of studies

- Reference Collection to push back against "Common Statistical Myths": a list of references that may be used to argue against some common statistical myths or no-nos (link from Erin)

The following books are found on the shelves by the printer:

- A Marginal Regression Modeling Framework for Evaluating Medical Diagnostic Tests. Statistics in Medicine, Vol 16. Wendy Leisenring, Margaret Sullivan Pepe, Gary Longton

- Epi-Genetics. The Ultimate Mystery of Inheritance. Richard C Francis

- Epigenetic Epidemiology. Karin B Michels

- Genome Mapping and Genomics in Human and Non-human Primates. Duggirala, Almasy, Williams-Blangero, Paul, Kole

- HTML and XHTML. The Definitive Guide. Chuck Musciano and Bill Kennedy. 4th Ed.

- Increasing the Quantity and Quality of the Mathematical Sciences Workforce through Vertical Integration and Cultural Change. Margaret Barry Cozzens

- Introducing the UNIX System. Henry McGilton, Rachel Morgan

- Mathematical and Statistical Challenges for Sustainability. NSF workshop report, Nov 2010. Julie Rehmeyer

- Mathematical Statistics with Mathematica. Colin Rose, Murray D. Smith

- Measurement Theory and Practice. The World through Quantification. David J. Hand

- MySQL. The definitive guide to using, programming, and administering MySQL 4 databases. 2nd Ed. Paul DuBois

- SQL Antipatterns. Avoiding the Pitfalls of Database Programming. Bill Karwin

- Statistical Computing with R. Maria L. Rizzo

- Statistical Design. George Casella

- The Little SAS Book (A Primer). 4th Ed. Lora D. Delwiche and Susan J. Slaughter

Please find below information on conference travel. Stat Lab supports your professional growth by covering registration and travel expenses, up to $2,000 per fiscal year.

To start the process for attending a conference:

1. Send an email identifying the conference to both Peter Powers-Lake (peterp1@email.arizona.edu) and Jennifer.

2. Submit the Travel Authorization form. Be sure to download the form before filling it out, or all the information will be lost. The university requires the Travel Authorization form to be submitted through Adobe Sign (link at the bottom of the form). If the travel is not connected to a grant, please route it to Peter: peterp1@email.arizona.edu. There is no need to also route it to a Fund Approver.

Reimbursable expenses

See the UA travel policy overview brochure and detailed information. In short, expenses that can be reimbursed are:

- Conference registration

- Airline ticket stubs

- Meal expenses within the per diem breakdown, for meals not provided by the conference

- Lodging and incidentals

- Bus/subway/shuttle/taxi fare

- Car rental/fuel/tolls

- Parking (outside of lodging charges)

Within 1 week after travel, provide itemized receipts for your expenses to Peter for reimbursement.

For questions, please contact Jennifer.

Below is a list of useful websites commonly used by Stat Lab personnel:

- Room reservations for BSRL. The main BSRL page takes you there too. There's also the AHSC scheduler for rooms in BSRL and other nearby buildings. The system is slow most of the time, so patience is needed. Be sure to put in a password when making a reservation (any password works), or other folks can change or delete your reservation. Here are maps of available conference rooms.

- Room reservations for Keating. This can also be linked to through the BIO5 resources page (at top right of bio5.org). The system is not very intuitive and first floor rooms require a KFS number.

- UAccess - used to access UArizona related personnel information, such as time reporting, benefits, etc.

- BIO5 IT and facilities support - used to submit a ticket for help.

- Keating BIO5 forms and templates - FedEx forms, BIO5 room rental, and conference room diagrams.

- UArizona brand resources - downloadable templates for Word, PowerPoint, etc.

- Financial services - used for a multitude of financial information.

- University closures, holidays, and time reporting codes - information regarding dates, compensation policy, and explanations of time reporting codes.

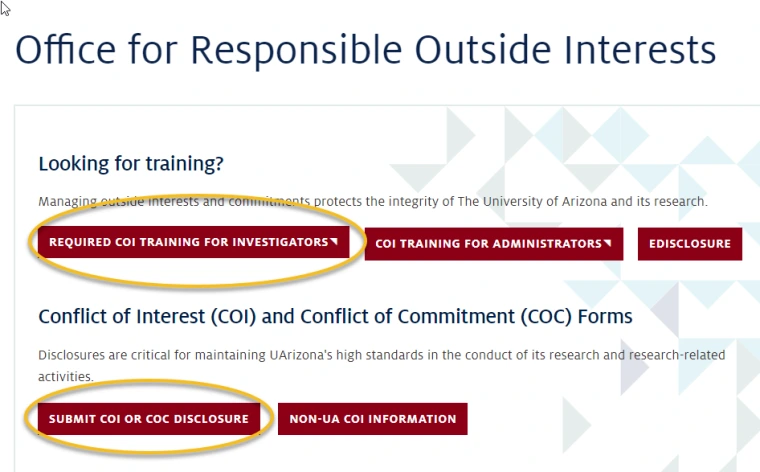

- HIPAA training information

- Request Keating access and BSRL third floor access

    - BSRL Visitor - grants access to the first floor entrances, the south side of 2nd floor BSRL and 3rd floor.

    - Keating Visitor - grants access to the first floor entrances and elevators (except the basement).

    - BSRL/Keating Bridge - grants access to the bridges between Keating and BSRL.

    - Fourth floor BSRL requires permissions. If you find you need access, let Janet know, and she can help get that started.

- University of Arizona email signature generator

    - Mac Users: Firefox works best

    - Windows Users: Chrome works best

    - If you would like to have the social media links support BIO5, here are what the links should be:

        - Facebook - https://www.facebook.com/UAZBIO5

        - Twitter - https://twitter.com/UAZBIO5

        - Instagram - https://www.instagram.com/UAZBIO5

        - LinkedIn - https://www.linkedin.com/company/uaz-bio5-institute

Conflict of Interest (COI) training and disclosures: After completing the training, you will need to wait overnight before submitting the disclosure. This could be important whenever you are listed on a grant proposal, in which case a missing COI can potentially hold up routing or funding. Importantly, when you complete the training and disclosure, please send Janet the dates of completion, and she will store them for Stat Lab records.

- University of Arizona Human Resources guidelines for new employees

- Subscribing to BSRL / Keating email lists

    - Manage email lists: https://list.arizona.edu/sympa/

    - Instructions for adding/deleting lists: https://uarizona.service-now.com/sp?id=kb_article_view&sysparm_article=KB0010095

    - List names

University of Arizona Statistics Consulting Laboratory Facilities and Resources

The Statistics Consulting Laboratory (Stat Lab) offices and computing facilities are located in the Bioscience Research Laboratory Building at UArizona (~700 sq. ft.). The Stat Lab maintains a modern desktop computing environment via networked Unix-based, Apple, and MS Windows computers. Data integrity is maintained by hourly incremental back-up. Internet access is provided by campus-wide 100Gb Internet2. Software available on Stat Lab computers includes the R statistical software environment, the Python and Perl scripting languages, the TeX document preparation system, and other Unix-based computing tools and programming languages (e.g., C++, MySQL). In addition, there is a full line of Microsoft products including Access, Excel, Word, and PowerPoint. Other statistical software available includes SAS, Stata, Stan, and JAGS, among others.

The Stat Lab makes extensive use of reproducible research computing tools (described below), whereby all data management and statistical analysis code is embedded into a single document. Thus, analysis results can be computed dynamically from raw data and automatically included in an executable document. Moreover, the data and document together constitute an auditable history of the analysis project. In addition, Stat Lab statisticians make use of the Rshiny environment for rapid development and deployment of novel analysis tools (see below).

Finally, for clinical and community-based research trials, Stat Lab statisticians frequently make use of the Research Electronic Data Capture software (REDCap). REDCap is a secure web application for building and managing online surveys and databases. While REDCap can be used to collect virtually any type of data, including in 21 CFR Part 11, FISMA, and HIPAA-compliant environments, it is specifically geared to support online or offline data capture for research studies and operations. The UArizona REDCap instantiation is hosted in a secure server environment maintained by the University of Arizona Health Sciences Center.

Network and High-Performance Computing Resources: The Stat Lab infrastructure is connected through the UArizona campus network to the 100Gb Internet2 innovation platform, ensuring the bandwidth required for high-throughput projects. Computational infrastructure for analysis pipelines consists of a 2748-core, 22.85 TFLOPS SGI Altix ICE 8400; a shared-memory SGI UV 1000 with 928 cores, 9.87 TFLOPS, and 2.87 TB RAM; and a 1248-core, 13.28 TFLOPS IBM iDataPlex Altix. This is supported by expandable high-performance storage from Data Direct Network (DDN) with 620 TB capacity, using the IBM GPFS file system. A 1200-core cloud platform, Atmosphere (OpenStack), provides preconfigured images for RNAseq analysis and NGS viewers. It is supported by a 900 TB iRODS data management platform for storing and managing NGS and high-throughput imaging data, accessible via the web-based BISQUE platform for image data management and analysis and via the Discovery Environment workbench. Popular preconfigured and optimized analysis packages for RNAseq (Tuxedo suite etc.) and image processing applications are installed on all available platforms, along with ancillary NGS bioinformatics and image-informatics tools and applications.

Reproducible research methods: The Stat Lab data analysis workflow routinely applies reproducible research methods, using the R statistical programming language, the knitr package for dynamic document production, and the Git version control system (http://git-scm.com/), for all consulting and collaborative computation. Reproducible research tools allow the integration of text, formatting, and executable analysis code in a single ‘executable document.’ This combination provides a powerful tool for documenting and executing the entire computational path from initial data read to report generation. The entire computational history, including data recoding, missing value replacement, statistical analysis procedures, and graphics generation, is included in the document and is completely reproducible by any user with access to the data and document file. This workflow creates an ‘audit history’ for results and can be shared with other investigators. Operationally, the greatest benefit of these tools is that they document our work for the benefit of our ‘future selves’ and provide a vehicle for creating an institutional memory that outlives the tenure of any single individual. We routinely use these reproducible research methods for all Statistics Consulting Lab projects.

Rapid development and deployment of novel tools: The R statistical language is ideally suited for the rapid development of new analysis methods and functionality. New methods are easily shared with other R users as R packages (http://cran.r-project.org/web/packages/), which incorporate associated functions into a single, efficient module. To share novel applications (apps) with non-R programmers, we use Rshiny (http://shiny.rstudio.com/), an open-source R package that provides a powerful web framework for building web applications using R. Rshiny allows rapid deployment of analysis code into interactive web applications without requiring HTML, CSS, or JavaScript knowledge. This is a powerful tool for collaboration.

RNASeq data analysis pipeline: RNASeq data analysis requires multiple processing steps and quality control checks which have been standardized within the Stat Lab. This ‘pipeline’, summarized below, includes procedures for quality control, alignment and summarization, differential expression, and gene set analysis. These procedures evolve to reflect best practices within the field, and may be modified to fit the specific details of each experiment.

Quality control: Quality checks are performed both before and after alignment. Before alignment, FastQC (www.bioinformatics.babraham.ac.uk/projects/fastqc) will be used to analyze sequence and base quality, per sequence GC content, sequence duplication, and over-represented sequences. Post-alignment quality control will involve duplication detection, off target capture, strand specificity, GC bias, 3’ to 5’ ratio, coverage at each gene, and inter-experiment variability. Software such as Picard tools (http://broadinstitute.github.io/picard/), RNA-SeQC (1), RSeQC (2), and HTSeq (3) will be used for RNASeq pre- and post-alignment quality control.

Alignment and summarization: Reads will be aligned to the whole genome using TopHat (4) to understand the impact of off-target sequencing. We will use the CCDS (5) gene annotations for summarizing expression at the gene level. Raw counts as well as FPKM values will be computed for each gene. We will use CummeRbund (6) to summarize output from Cufflinks (7) for relationships between genes, transcripts, transcription start sites, and CDS regions. HTSeq and RSeQC can use either the raw alignment data in BAM files or the FPKM values to assess the quality of the data after alignment.
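For reference, FPKM (fragments per kilobase of transcript per million mapped fragments) is conventionally defined from raw counts as shown below. The symbols are notation introduced here for illustration, and tools such as Cufflinks estimate the quantity with a more elaborate statistical model than this simple ratio.

$$
\mathrm{FPKM}_g = \frac{10^{9}\, C_g}{N \, L_g}
$$

Here $C_g$ is the number of fragments mapped to gene $g$, $N$ is the total number of mapped fragments in the sample, and $L_g$ is the length of gene $g$ in base pairs. As a made-up example, a gene with $C_g = 500$ fragments and $L_g = 2000$ bp in a sample with $N = 2 \times 10^{7}$ mapped fragments has $\mathrm{FPKM}_g = 10^{9} \cdot 500 / (2 \times 10^{7} \cdot 2000) = 12.5$.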

Differential expression: Differential expression analysis will be conducted using the R/Bioconductor (8) suite of tools. Our standard analyses use the edgeR (9) or DESeq packages (10) for differential expression. edgeR implements novel statistical methods based on the negative binomial distribution as a model for count variability, including empirical Bayes methods, exact tests, and generalized linear models. The package is especially suitable for analyzing designed experiments with multiple experimental factors but possibly small numbers of replicates. It has unique abilities to model transcript specific variation even in small samples, a capability essential for prioritizing genes or transcripts that have consistent effects across replicates. DESeq models the mapped count data using a negative binomial distribution, and accommodates over-dispersion of counts with respect to the Poisson. The differential expression analysis will produce a ranked list of differentially expressed genes. We use the Benjamini and Yekutieli (11) method for false discovery rate control.
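As a brief sketch of the false discovery rate step, the Benjamini and Yekutieli procedure can be written as follows. The notation ($m$, $\alpha$, $p_{(i)}$, $c(m)$, $k$) is introduced here for illustration and is not taken from the Stat Lab pipeline itself.

$$
p_{(1)} \le p_{(2)} \le \dots \le p_{(m)}, \qquad
c(m) = \sum_{i=1}^{m} \frac{1}{i}, \qquad
k = \max\left\{ i : p_{(i)} \le \frac{i\,\alpha}{m\,c(m)} \right\}
$$

With $m$ genes tested at target false discovery rate $\alpha$, the hypotheses corresponding to $p_{(1)}, \dots, p_{(k)}$ are rejected, and none are rejected if no such $i$ exists. The factor $c(m)$ makes the threshold more conservative than the Benjamini-Hochberg threshold $i\,\alpha/m$ and is what allows the control to hold under arbitrary dependence among tests. In R, the corresponding adjusted p-values are available from `p.adjust(p, method = "BY")`.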

Gene set analysis: A collection of genes related to a biological function or composing a signaling network is referred to as a gene set. Many gene sets are defined by Gene Ontology Biological Processes (GO) and the Kyoto Encyclopedia of Genes and Genomes (KEGG), as well as other databases (e.g., Biocarta, WikiPathways, Pathway Commons). Gene sets may also be specified by an investigator a priori, to investigate a collection of interest. We use the Globaltest procedure (12) to evaluate associations between gene sets and experimental factors. This procedure tests a specified gene set, using all genes in the set, without initial filtering by differential expression. Globaltest evaluates whether any genes in the set are associated with phenotype, and inference is based on subject resampling. By contrast, gene set enrichment analysis (GSEA, 13) compares the ranking of genes in the set with that of randomly selected genes, and inference is based on gene resampling. Independent evaluation (14) suggests that Globaltest provides improved performance in identifying connectivity among genes, revealing biological themes, and producing reliable results from small numbers of replicates.

References

1. DeLuca, D. S., J. Z. Levin, A. Sivachenko, T. Fennell, M.-D. Nazaire, C. Williams, M. Reich, W. Winckler, and G. Getz. 2012. RNA-SeQC: RNA-seq metrics for quality control and process optimization. Bioinformatics 28:1530–1532.

2. Wang, L., S. Wang, and W. Li. 2012. RSeQC: quality control of RNA-seq experiments. Bioinformatics 28:2184–2185.

3. Anders, S., P. T. Pyl, and W. Huber. 2015. HTSeq-a Python framework to work with high-throughput sequencing data. Bioinformatics 31:166–169.

4. Trapnell, C., L. Pachter, and S. L. Salzberg. 2009. TopHat: discovering splice junctions with RNA-Seq. Bioinformatics 25:1105–1111.

5. Pruitt, K. D., J. Harrow, R. A. Harte, C. Wallin, M. Diekhans, D. R. Maglott, S. Searle, C. M. Farrell, J. E. Loveland, B. J. Ruef, E. Hart, M.-M. Suner, M. J. Landrum, B. Aken, S. Ayling, R. Baertsch, J. Fernandez-Banet, J. L. Cherry, V. Curwen, M. DiCuccio, M. Kellis, J. Lee, M. F. Lin, M. Schuster, A. Shkeda, C. Amid, G. Brown, O. Dukhanina, A. Frankish, J. Hart, B. L. Maidak, J. Mudge, M. R. Murphy, T. Murphy, J. Rajan, B. Rajput, L. D. Riddick, C. Snow, C. Steward, D. Webb, J. A. Weber, L. Wilming, W. Wu, E. Birney, D. Haussler, T. Hubbard, J. Ostell, R. Durbin, and D. Lipman. 2009. The consensus coding sequence (CCDS) project: Identifying a common protein-coding gene set for the human and mouse genomes. Genome Res. 19:1316–1323.

6. Trapnell, C., A. Roberts, L. Goff, G. Pertea, D. Kim, D. R. Kelley, H. Pimentel, S. L. Salzberg, J. L. Rinn, and L. Pachter. 2012. Differential gene and transcript expression analysis of RNA-seq experiments with TopHat and Cufflinks. Nature Protocols 7:562–578.

7. Trapnell, C., B. A. Williams, G. Pertea, A. Mortazavi, G. Kwan, M. J. van Baren, S. L. Salzberg, B. J. Wold, and L. Pachter. 2010. Transcript assembly and quantification by RNA-Seq reveals unannotated transcripts and isoform switching during cell differentiation. Nature Biotechnology 28:511–515.

8. Gentleman, R. C., V. J. Carey, D. M. Bates, B. Bolstad, M. Dettling, S. Dudoit, B. Ellis, L. Gautier, Y. Ge, J. Gentry, K. Hornik, and F. Leisch. 2004. Bioconductor: open software development for computational biology and bioinformatics. Genome Biology 5:R80.

9. Robinson, M. D., D. J. McCarthy, and G. K. Smyth. 2010. edgeR: a Bioconductor package for differential expression analysis of digital gene expression data. Bioinformatics 26:139–140.

10. Anders, S., and W. Huber. 2010. Differential expression analysis for sequence count data. Genome Biology 11:R106.

11. Benjamini, Y., and D. Yekutieli. 2001. The control of the false discovery rate in multiple testing under dependency. Ann. Statist. 29:1165–1188.

12. Goeman, J. J., S. A. Van De Geer, F. De Kort, and H. C. van Houwelingen. 2004. A global test for groups of genes: testing association with a clinical outcome. Bioinformatics 20:93–99.

13. Subramanian, A., P. Tamayo, V. K. Mootha, S. Mukherjee, B. L. Ebert, M. A. Gillette, A. Paulovich, S. L. Pomeroy, T. R. Golub, E. S. Lander, and J. P. Mesirov. 2005. Gene set enrichment analysis: A knowledge-based approach for interpreting genome-wide expression profiles. PNAS 102:15545–15550.

14. Hua, J., M. L. Bittner, and E. R. Dougherty. 2014. Evaluating gene set enrichment analysis via a hybrid data model. Cancer Informatics 13:1–16.

University of Arizona Cyberinfrastructure and Research Support Facilities

UArizona researchers have access to scalable computing systems, data management platforms, institutional data repositories, and visualization environments that are connected through a reliable campus network. These platforms provide multiple services at no cost to researchers, and with generous allocations, learning, and training avenues. These services and capabilities are provided by key institutionally supported organizations outlined below, which collectively facilitate scholarly pursuits and computational productivity for research projects throughout their lifecycles.

University Information Technology Services (UITS) maintains institutionally funded research computational infrastructure and consulting for High Performance Computing (HPC), Visualization, and Statistics, available at no cost to all campus researchers. UArizona researchers may fund additional HPC compute nodes that are centrally managed as part of the larger system, with high-priority access to purchased nodes. Unused compute cycles from buy-ins are available to other campus researchers at a lower priority. Buy-in can improve grant competitiveness through lower total costs of ownership that must be included in the grant (i.e., hardware only, no operations costs), evidence of campus cost-sharing, and more positive funding agency review of cost-effective, centrally administered facilities. Systems available to researchers:

- El Gato general purpose cluster from NSF MRI (2013) with 2176 Intel cores, FDR IB interconnect, 26TB of RAM, 140 NVIDIA Tesla K20X GPUs.

- Ocelote general purpose cluster with 11528 cores, 48 NVIDIA Tesla P100 GPUs, 57TB of memory, FDR Infiniband Interconnect, 10G ethernet, and virtual shared memory software.

- Eyra, an isolated testing platform dedicated to prototyping and proof-of-concept studies: 51 nodes with 2x12 Intel 2.67GHz X5650 cores; 64GB RAM; 1 x 2TB SATA disks.

- The primary HPC cluster Puma provides:

    - 48 Altus XE2242 CPU Nodes with 96 CPU x 4 = 384 CPU, 2.4GHz, 512GB RAM per node.

    - 6 Altus XE2214GT GPU Nodes with 96 CPU, 2.4GHz, 512GB RAM, 4 32GB V100s per node.

    - 2 Altus XE1212 High Memory Nodes with 96 CPU, 2.4GHz, 3072GB RAM per node.

    - Total CPUs: 19,200 2.4 GHz AMD (EPYC 7642 Rome).
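As a quick check on the Puma figures above, the listed counts reproduce the stated total, assuming each of the 48 XE2242 entries contributes 4 x 96 = 384 CPUs as the first line indicates:

$$
48 \times 384 + 6 \times 96 + 2 \times 96 = 18{,}432 + 576 + 192 = 19{,}200
$$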

Research Computing Support includes multi-core/processor programming, support for scaling and benchmarking of parallel code, Singularity container technologies, and expertise in specialized accelerator technologies such as NVIDIA general purpose graphics processing units (GPGPU or GPU). Computing resources are housed in the Research Data Center (RDC), a 1200 ft² raised floor data center dedicated to centrally managed research computing systems and large grant-funded systems. The 1900 ft² Co-location Data Center provides space for systems purchased and administered by colleges, departments, and research projects. UITS data centers have badge swipe access with two-factor authentication and video surveillance. Data centers are monitored 24/7 by Operations staff; automated environmental and system monitoring is in place. All personnel with unescorted data center access have undergone background checks and are required to be US citizens. UITS provides and manages secure research environments within AWS GovCloud to support requirements for CUI and Export Controlled research.

UArizona is a member of Internet2 (I2), a not-for-profit provider of a 100Gb ultra-high speed backbone network for advancing Research and Education. The connection from UArizona to Sun Corridor is a primary 100Gb circuit and a secondary 10Gb circuit via two redundant edge routers. Sun Corridor is connected to I2 via dual 100G connections. The UArizona campus network consists of eight redundant core routers in two core sites and eight distribution routers in three distribution locations. The core and distribution layer devices are interconnected at multi-100Gbps, and most campus buildings are connected at 10Gbps. All campus buildings are dually connected to campus distribution points. A Science DMZ and Data Transfer Nodes (DTN) for high-volume data transfers are deployed at the university network perimeter, outside the border firewalls, via direct connections to a campus DMZ/Edge at multi-10Gbps. The Science DMZ is secured via static access lists deployed on two redundant, high-throughput L3 dedicated switches.

UArizona Health Sciences (UAHS) Office of Data Science Services (ODSS), in collaboration with UITS, provides a secure analysis enclave, Soteria, that gives access to key infrastructure for processing and analyzing data that have PHI/HIPAA constraints. Soteria includes popular data science platforms such as Jupyter notebooks, RStudio, and Streamlit, provided through RStudio Connect. Additionally, popular medical imaging research platforms such as XNAT, as well as dedicated cloud (AWS) and HPC systems that meet the compliance needs, are provided. All security and compliance needs are managed institutionally.

CyVerse, funded by the National Science Foundation, provides scientists with powerful computational infrastructure to handle huge datasets and complex analyses, enabling data-driven discovery and painless collaboration. CyVerse’s extensible platforms provide data storage, bioinformatics tools, image analyses, cloud services, APIs, and more. CyVerse computational grid clusters (1500 processing cores), virtualization clusters (Atmosphere cloud service platform) servicing more than 3000 virtual machines with 8TB RAM (maximum resources per instance is 32-cores and 256G RAM), CyVerse’s data services, and 7 PB of storage are located in two data centers at UArizona, connected with high performance 10G networking. A facility at the Texas Advanced Computing Center (TACC) stores a full backup of the CyVerse Data Store, updated nightly. The CyVerse Collaborative and TACC’s facilities have 10G connectivity using Internet2 via Sun Corridor networking. TACC resources include:

- 18 petaflops of peak performance

- 4200 Intel Knights Landing 68 core nodes, 96GB DDR RAM, 16GB high speed MCDRAM

- 1736 Intel Xeon Skylake nodes, each with 48 cores and 192GB of RAM

- 100 Gb/sec Intel Omni-Path network

- Lustre file systems with a storage capacity of 31PB

- TACC's Stockyard-hosted Global Shared File System provides additional Lustre storage

CyVerse provides specific tooling to support Machine Learning (ML) workflows. This includes support for dedicated instances of the MLflow ML lifecycle manager, comprising a public-facing model registry and a multi-tenant Kubernetes cluster with support for federation with external clusters. Contemporary workflow management systems such as Argo, Makeflow, and WorkQueue, along with Pegasus and Condor, are supported. These are integrated with observation and monitoring platforms (Grafana, Prometheus) and a data management system (iRODS), collectively providing the requisite MLOps (Machine Learning Operations) support for diverse workloads.

If computational resources beyond UArizona and CyVerse are needed, the XSEDE national supercomputer network, including the Jetstream cloud-based computing platform, may be employed.

UArizona Libraries’ Office of Digital Innovation and Stewardship (ODIS) offers training and consultation on data-intensive research and digital scholarship. ODIS supports open access and data sharing. Through partnerships and collaborations, ODIS provides access to distinctive digital collections and the scholarly record of UArizona. Libraries host weekly drop-in hours for R and Python programming, GIS, research data management, and provide ongoing topical workshops and events.

UArizona Libraries’ Research Data Management Services (RDMS) unit supports the Open Science Framework, DMPTool for data management plans, and provides consultative services:

- Writing data management plans (DMPs) for grant applications.

- Data management strategies and workflows for research projects and research teams.

- Data archiving, documentation, metadata, and publishing.

- Supporting best practices in spreadsheets and databases.

- Reproducible research.

To foster open, reproducible, and collaborative research, UArizona Libraries are developing data services to provide responsible curation for research datasets and related supplementary outputs (e.g., software/code, media) generated through research activities by UArizona-affiliated researchers. Their interest is in sharing research products that can be made public, and the data repository is not meant as a general data store for private information, data related to the business functions of the University, or any kind of Regulated data.

UArizona Libraries provide workshops and consultations on a range of issues in scholarly communication, including identity management, open access, fair use, and copyright. They offer assistance with ORCID and DOIs, and host a selection of scholarly journals edited and managed by UArizona students, faculty, and affiliates. The UArizona Campus Repository facilitates open access to research, creative works, publications, and teaching materials. We collect, share, and archive content selected and deposited by faculty, researchers, staff, and affiliated contributors. Deposited content is discoverable via Google, Google Scholar, and other search engines. Eligible content includes pre-prints, departmental publications, technical reports, white papers, theses, and dissertations.

CATalyst Studios in the Main Library is part of the Student Success District and features a Data Studio, Virtual Reality Studio, and Maker Studio. Facilities are open for use by all campus and community members. Learning to use spaces is facilitated with workshops, user certifications, and drop-in support.

- Data Studio features a 6x3 Data Viz wall of Samsung UH46N-E displays, 11520 x 3240 pixels.

- Virtual Reality Studio features the latest tethered and standalone headsets, including Oculus Rift, HTC Vive, and Oculus Go, as well as stations for 3D modeling and a green screen cyclorama.

- Maker Studio provides access to prototyping equipment for learning communities of all types. Technology includes 2 Epilog FusionPro laser cutters, Ultimaker 3 3D printers, a Shapeoko CNC router, a Roland Camm-1 vinyl cutter, a Juki industrial sewing machine, Arduino, and Raspberry Pi.